A data center is a facility housing servers and networking equipment used to store, process, and distribute data. Modern data centers leverage cloud architecture and virtualization. There are 4 standards of data centers, classified from Tier 1 to Tier 4. There are 6 types of data centers: enterprise, cloud, colocation, hyperscale, edge, and modular.

Data centers serve 4 main functions: network connectivity, data storage, website hosting, and backup and disaster recovery. They enable website hosting by storing and delivering website files through systems of web servers. Continuous internet connectivity ensures these websites stay accessible online. Routers and switches are the 2 key components that route network traffic. 4 other essential components are servers, cabling, power, and cooling systems. Data centers connect to Internet Service Providers (ISPs) through edge routers and fiber optics, which form the backbone of the internet.

Data centers improve latency in 3 ways: physical location optimization, efficient routing and traffic management, and content delivery networks (CDNs). Data centers also have an important impact on web hosting performance. The quality of a data center's infrastructure directly affects server uptime, and its location directly affects web host server speed. Choosing the best data center location for your web host depends on 3 key factors: geographical proximity to your audience, data center standard, and local privacy regulations.

Table of Contents

- What Is a Data Center?

- What Are the Different Types of Data Centers?

- What Are Data Centers Used For?

- How Do Data Centers Enable Website Hosting?

- What Networking Devices Allow Data Centers to Route Traffic?

- What Connects Data Centers to ISP?

- Can Data Center Impact Web Hosting Performance?

- What Makes the Best Data Center Location for Web Hosting?

What Is a Data Center?

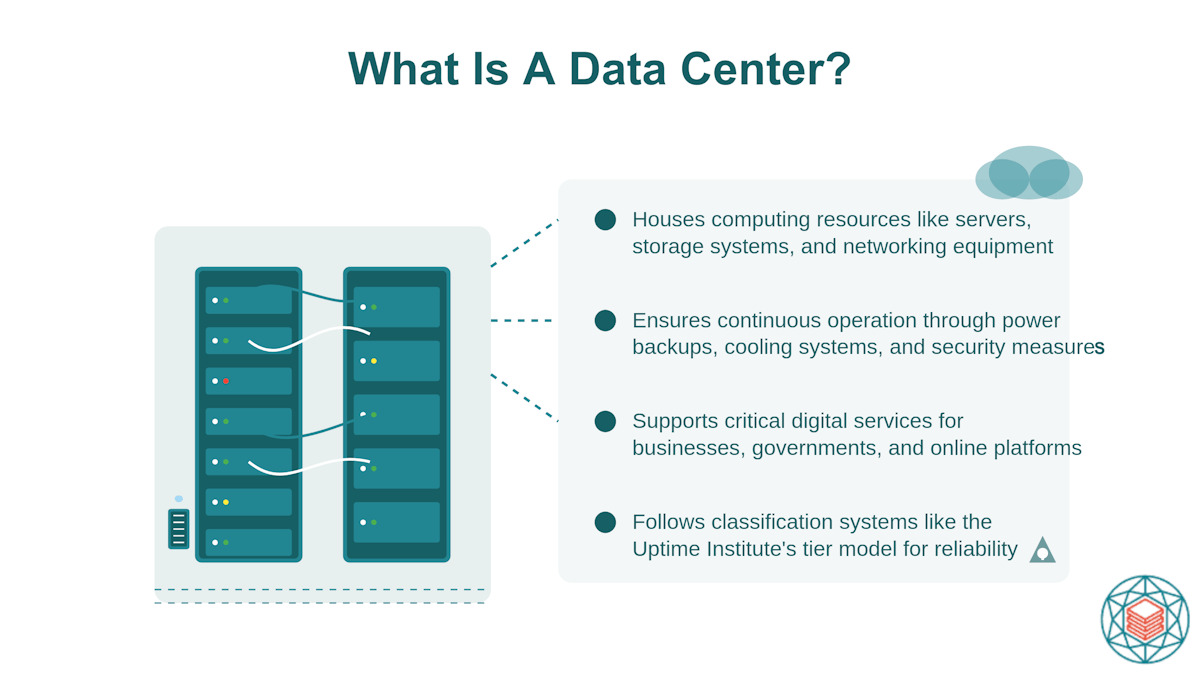

A data center (also called a datacenter) is a facility that houses computing resources like servers, storage systems, and networking equipment to store, process, and distribute data. The facility provides and protects the infrastructure that powers websites, applications, and cloud services.

Data centers ensure continuous operation through power backups, cooling systems, and security measures. Businesses, governments, and service providers increasingly use modern data centers to manage IT workloads, host online platforms, and support critical digital services. Data centers observe certain standards that draw from redundancy-focused classification systems like the Uptime Institute’s tier model. These standards help businesses choose a data center based on performance, reliability, and uptime requirements.

What Is a Modern Data Center?

A modern data center is a facility that uses cloud computing and virtualization to improve scalability, efficiency, and resource management. Unlike traditional data centers that rely on dedicated physical servers in a single location, modern data centers distribute computing resources across multiple sites using cloud infrastructure. This enables on-demand access to storage, processing power, and applications. Virtualization allows multiple virtual machines to run on a single server, reducing hardware dependency and optimizing resource use.

Modern data centers leverage other forms of cutting-edge technology like automation, AI-driven security, biometric access controls, and energy-efficient cooling systems such as liquid cooling and hot aisle containment. These advancements further enhance performance and reduce operational costs.

What Are the Different Standards of Data Centers?

There are 4 different standards of data centers, classified by the Uptime Institute as Tier 1 to Tier 4. Tier 1 data centers have basic infrastructure with no redundancy. Tier 2 adds redundant power and cooling components. Tier 3 provides high availability with multiple redundant systems. Tier 4 offers the highest reliability with fully redundant, fault-tolerant infrastructure.

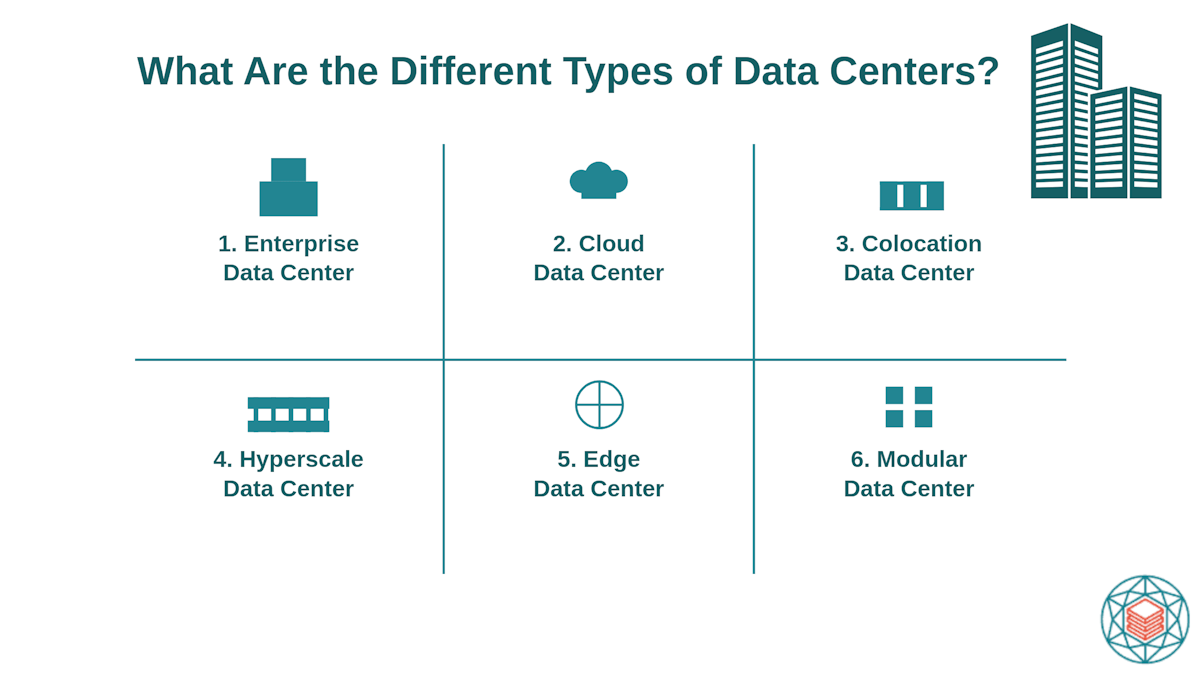

What Are the Different Types of Data Centers?

Data center types differ in how they are owned, operated, and structured. This enables data centers to cater to a range of business and technological needs (e.g. private use, cloud services, or shared infrastructure). There are 6 types of data centers:

- Enterprise data center

- Cloud data center

- Colocation data center

- Hyperscale data center

- Edge data center

- Modular data center

1. Enterprise Data Center

An enterprise data center is a privately owned facility built and managed by a single organization to support its internal IT operations. It provides dedicated resources, full control over security, and compliance with specific business requirements. Large corporations, financial institutions, and government agencies typically use enterprise data centers.

2. Cloud Data Center

A cloud data center is a remote facility operated by cloud service providers. Well-known cloud service providers include AWS (Amazon Web Services), Google Cloud, and Microsoft Azure. It offers scalable computing resources on demand, which eliminates the need for businesses to maintain physical server infrastructure. Cloud data centers support distributed workloads, disaster recovery, and global accessibility.

3. Colocation Data Center

A colocation data center (also called a multi-tenant data center) is a shared facility where businesses rent space for their servers and IT equipment. The provider supplies power, cooling, and network connectivity, while customers retain control over their hardware. Colocation reduces costs and improves reliability without requiring a company to build its own data center.

4. Hyperscale Data Center

A hyperscale data center is a massive facility designed for cloud computing, big data processing, and large-scale applications. Companies like Amazon, Google, and Meta operate hyperscale data centers to handle millions of users and high-performance workloads. These data centers use automation, advanced cooling, and custom hardware.

5. Edge Data Center

An edge data center (also called a micro data center) is a small facility located near end users to reduce latency. End-user devices, IoT sensors, and applications generate data that the edge centers filter, analyze, and optimize locally. These centers handle real-time tasks before transmitting only essential information to larger centralized data centers like enterprise or hyperscale facilities. This setup reduces latency, enhances performance, minimizes bandwidth usage, and ensures fast response times for applications like streaming, cloud gaming, autonomous vehicles and the IoT (Internet of Things).

6. Modular Data Center

A modular data center is a preassembled, self-contained facility that can be quickly deployed and scaled as needed. It consists of standardized, prefabricated modules (often housed in shipping container-like structures) that come with built-in power, cooling, and IT infrastructure. These modular units can be transported to and installed in various locations. They are ideal for temporary deployments, disaster recovery, and remote areas where building a traditional data center is impractical.

What Are Data Centers Used For?

Data centers are used for 4 primary functions. The first function is network connectivity. Data centers act as central hubs that route internet traffic, connecting businesses, applications, and users through high-speed fiber-optic networks and networking devices like routers and switches. The second function is data storage and processing. Data centers house powerful servers that store, manage, and process vast amounts of data for businesses, governments, and cloud services.

The third function is website hosting. Web hosting refers to a web service where a provider helps individuals and businesses get their websites on the internet. The fourth function is backup and disaster recovery. Companies use data centers to store backup copies of critical data and maintain disaster recovery solutions. This protects against expensive hardware failures, cyberattacks, and unexpected outages.

How Do Data Centers Enable Website Hosting?

Data centers enable website hosting by housing and maintaining the web servers that store and deliver website content. A web server is a combination of hardware and software that processes user requests and serves website data.

Data centers support web hosting in 2 ways. First, they store website files, including HTML, CSS, JavaScript, images, and databases, ensuring data is securely maintained. Second, data centers deliver website content by processing browser requests, retrieving the necessary files, and transmitting them to users’ devices over the internet. Web hosting companies manage the data center’s server infrastructure, networking, and software needed for website deployment.
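The store-and-deliver cycle described above can be sketched with Python's standard library. This is a minimal illustration, not how a production web host is configured: the function name `make_file_server` and its defaults are hypothetical, and real hosting stacks use dedicated web server software.

```python
import functools
import http.server


def make_file_server(directory, host="127.0.0.1", port=0):
    """Build an HTTP server that delivers the files stored in `directory`.

    Hypothetical helper for illustration. port=0 asks the operating
    system to pick any free port.
    """
    # Bind the handler to the folder that holds the website files.
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=directory
    )
    # Each browser request is processed in its own thread.
    return http.server.ThreadingHTTPServer((host, port), handler)
```

Calling `make_file_server("public").serve_forever()` would answer browser requests with the HTML, CSS, JavaScript, and image files found in an assumed `public` folder.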

How Do Data Centers Make Websites Accessible on the Web?

Data centers ensure websites remain accessible by providing continuous internet connectivity and minimizing downtime. Web servers are connected to the internet through high-speed fiber-optic networks and networking devices like routers and switches that manage data flow efficiently. To maintain 24/7 website availability, data centers use redundant power supplies, cooling systems, and security measures that prevent disruptions caused by hardware failures, cyber threats, or network congestion.
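A rough way to see why redundancy matters is to estimate the availability of several identical units when any single unit is enough to keep the service running. The sketch below assumes failures are independent, which real power and cooling systems only approximate. The function name is hypothetical.

```python
def combined_availability(unit_availability, redundant_units):
    """Availability of a group of units where one working unit suffices.

    Assumes each unit fails independently of the others (a simplification).
    """
    # The group is down only when every unit is down at the same time.
    all_down = (1 - unit_availability) ** redundant_units
    return 1 - all_down
```

Under that assumption, two power feeds that are each available 99% of the time give a combined availability of about 99.99%.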

What Networking Devices Allow Data Centers to Route Traffic?

Networking devices are hardware components that manage data flow between computers, servers, and other systems within a network. The 2 primary networking devices that route traffic are routers and switches.

What Is the Role of Routers?

The role of routers is to manage the flow of data between networks. This involves determining the most efficient path for each packet, distributing workloads, and optimizing performance. The 2 common types of routers are edge routers and core routers. Edge routers connect the data center to an internet service provider (ISP) to ensure external access for websites and applications. Core routers handle high-speed traffic within the data center, linking different network segments.
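One piece of how a router chooses where to send a packet is longest-prefix matching: among all routes that contain the destination address, the most specific one wins. The sketch below uses Python's `ipaddress` module. The routing table and next-hop labels are hypothetical, and real routers build their tables with routing protocols rather than a hard-coded dictionary.

```python
import ipaddress

# Hypothetical routing table: destination prefix -> next hop label.
ROUTES = {
    "0.0.0.0/0": "isp-uplink",          # default route towards the ISP
    "10.0.0.0/8": "core-router",        # traffic staying inside the data center
    "10.20.0.0/16": "storage-segment",  # a more specific internal segment
}


def next_hop(destination, routes=ROUTES):
    """Return the next hop of the most specific route containing destination."""
    address = ipaddress.ip_address(destination)
    matching = [
        (ipaddress.ip_network(prefix), hop)
        for prefix, hop in routes.items()
        if address in ipaddress.ip_network(prefix)
    ]
    if not matching:
        raise LookupError(f"no route to {destination}")
    # The longest prefix is the most specific match.
    _, hop = max(matching, key=lambda item: item[0].prefixlen)
    return hop
```

With this table, an address inside `10.20.0.0/16` is sent to the storage segment even though it also matches the broader `10.0.0.0/8` route and the default route.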

What Is the Purpose of Switches?

The purpose of switches is to enable direct communication between devices inside a data center. They connect servers, storage systems, and other networked hardware for fast, secure and efficient data transfer. Switches operate within a local network to reduce congestion and improve data flow, unlike routers which manage traffic between networks.
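Direct device-to-device delivery can be illustrated with a simplified learning switch: it remembers which port each device was last seen on and forwards frames only to that port once the destination is known. The class below is a hypothetical sketch that leaves out VLANs, table aging, and other features of real switches.

```python
class LearningSwitch:
    """Simplified model of a switch that learns device locations."""

    def __init__(self, ports):
        self.ports = list(ports)
        self.mac_table = {}  # MAC address -> port where it was last seen

    def receive(self, src_mac, dst_mac, in_port):
        """Return the list of ports the frame is forwarded to."""
        # Learn (or refresh) where the sender is attached.
        self.mac_table[src_mac] = in_port
        if dst_mac in self.mac_table:
            # Known destination: deliver to that single port.
            return [self.mac_table[dst_mac]]
        # Unknown destination: flood to every port except the incoming one.
        return [port for port in self.ports if port != in_port]
```

The first frame to an unknown device is flooded. Once that device replies, later frames between the two travel over a single port each way, which keeps unrelated links free of that traffic.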

What Are the Other Components of a Data Center?

There are 4 other components of a data center aside from routers and switches. The first component is servers, which form the core of a data center’s computing power. A server processes and stores data, and hosts applications, websites, and cloud services. An average onsite data center holds between 2,000 and 5,000 servers, according to IBM.

The second component is cabling, which connects networking devices and ensures high-speed communication between servers and external networks. The third component is power systems, which keep operations running even during electrical failures. This includes backup generators and uninterruptible power supplies (UPS). The fourth component is cooling systems, which regulate data center temperature and prevent overheating.

What Connects Data Centers to ISP?

An Internet Service Provider (ISP) is a company that provides internet access to businesses and individuals. Data centers are connected to ISPs through edge routers and high-speed fiber optic networks. Edge routers serve as the gateway between a data center’s internal network and the ISP, directing incoming and outgoing internet traffic. High-speed fiber optic cables carry data over long distances with minimal delay for fast and reliable connectivity. Together, data centers and ISPs form the backbone of the internet.

How Can Data Centers Improve Network Latency?

Network latency refers to the time it takes for data to travel between a source and a destination over a network. Improving latency refers to reducing this time. Data centers can improve network latency in 3 ways.

The first way is physical location optimization, which places data centers closer to users. This shortens the distance data needs to travel, thereby improving server response times. The second way is efficient routing and traffic management. This means using advanced network protocols and load balancing to prevent traffic congestion. The third way is using content delivery networks (CDNs). A CDN stores cached copies of website content across multiple locations, letting users access data from a nearer server.
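Load balancing, mentioned as part of traffic management, can be sketched in its simplest form as round-robin: each new request goes to the next server in a fixed rotation so no single server takes all the traffic. The class name and server labels are hypothetical, and production load balancers usually also weigh server health and current load.

```python
import itertools


class RoundRobinBalancer:
    """Hand out servers in a repeating rotation."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = itertools.cycle(servers)

    def pick(self):
        """Return the server that should handle the next request."""
        return next(self._rotation)
```

With servers `["web-1", "web-2", "web-3"]`, four consecutive calls to `pick()` return `web-1`, `web-2`, `web-3`, and then `web-1` again.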

Can Data Center Impact Web Hosting Performance?

Yes, data centers can impact web hosting performance. Web hosting performance is best understood through 2 indicators: server uptime and server speed. Server uptime refers to the percentage of time the host server remains fully operational without interruptions. Server speed refers to the amount of time the host server takes to respond to requests. This is also known as server response time and is measured in milliseconds (ms). Together, the two metrics are a good indicator of the quality of a web hosting service.
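Both indicators can be estimated from periodic health checks. The sketch below assumes a monitoring tool has already recorded, for each check, whether the server responded and how long it took. The function names and input shapes are hypothetical, and counting checks only approximates the share of time a server was up.

```python
import statistics


def uptime_percent(check_results):
    """Share of health checks that succeeded, as a percentage.

    check_results is a sequence of booleans (True = server responded).
    """
    if not check_results:
        raise ValueError("no checks recorded")
    return 100 * sum(check_results) / len(check_results)


def median_response_ms(response_times_ms):
    """Median server response time in milliseconds."""
    return statistics.median(response_times_ms)
```

The median is used here instead of the mean so that a single very slow response does not dominate the result. That is a design choice for this sketch, not something the metrics themselves require.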

Web hosting server uptime is directly impacted by the infrastructure of the data center. Web hosting server speed is directly impacted by the location of the data center.

How Does Data Center Infrastructure Affect Web Hosting Performance?

Data center infrastructure affects web hosting performance by determining the reliability of the servers, which directly affects the uptime of the websites and applications hosted on them.

Estimates of uptime by data center tier are outlined in a 2008 Uptime Institute whitepaper titled “Tier Classifications Define Site Infrastructure Performance”. According to the whitepaper, Tier 1 data centers have an expected uptime of 99.67%, or around 28.8 hours of downtime per year. Tier 2 data centers have an uptime of 99.75%, which reduces annual downtime to about 22 hours. Tier 3 data centers have 99.98% uptime and limit downtime to approximately 1.6 hours per year. Tier 4 data centers have 99.99% uptime and only 0.8 hours of downtime annually. Higher-tier data centers allow web hosts to provide stronger uptime guarantees to customers.
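The link between an uptime percentage and annual downtime is simple arithmetic: the share of the year that is not uptime, multiplied by the number of hours in a year. The helper below is an illustrative sketch with a hypothetical name, assuming a 365-day year.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, assuming a non-leap year


def annual_downtime_hours(uptime_percent):
    """Convert an uptime percentage into expected hours of downtime per year."""
    downtime_fraction = (100 - uptime_percent) / 100
    return downtime_fraction * HOURS_PER_YEAR
```

For example, 99.75% uptime works out to 21.9 hours of downtime per year, in line with the roughly 22 hours quoted for Tier 2. The other tiers land close to, but not exactly on, the quoted figures, presumably because the published percentages are rounded.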

How Does Data Center Location Affect Web Hosting Performance?

Data center location affects web hosting performance because of the effect of latency. Greater physical distance between the data center and end users causes longer delays in data delivery.

Data center location is important because it determines server proximity to 2 things: website visitors and major internet exchange points (IXPs).

In web hosting, high latency between the web server and website visitors leads to slow server response times and sluggish website loading. Hosting from a data center location near website visitors improves response times and site speed.

Proximity to major internet exchange points (IXPs) further enhances performance by reducing network hops between the server and end users.
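To put the distance effect in numbers, the sketch below estimates the minimum round-trip time imposed by distance alone. It assumes signals travel through fiber at roughly 200,000 km per second (about two-thirds of the speed of light in a vacuum) and ignores routing detours, network hops, and server processing, so real response times are higher. The names are hypothetical.

```python
# Approximate signal speed in optical fiber (an assumption of this sketch).
SPEED_IN_FIBER_KM_PER_S = 200_000


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time for a given one-way cable distance."""
    one_way_seconds = distance_km / SPEED_IN_FIBER_KM_PER_S
    return 2 * one_way_seconds * 1000
```

Under these assumptions, every 1,000 km between the server and the visitor adds at least about 10 ms to each round trip.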

What Makes the Best Data Center Location for Web Hosting?

The best data center location for web hosting depends on 3 factors. The first factor is close proximity to your website’s target audience. The second is a high data center standard of Tier 3 or Tier 4. The third is a level of privacy compliance that meets your particular business or industry needs. Privacy compliance (PIPEDA, GDPR, HIPAA) is typically determined by the data center location’s jurisdiction. Choosing the best server location ultimately depends on your website’s type and priorities. Compliance is more critical for sites handling sensitive data, while audience proximity is more critical for eCommerce sites requiring speed.
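The three factors can be combined into a simple selection rule: discard locations that miss the tier or compliance requirement, then pick the remaining one closest to the audience. The sketch below is one possible way to do this. The `Location` class, the candidate list, and the tier values are hypothetical examples, not ratings of real facilities, and great-circle distance is only a stand-in for real network distance.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    name: str
    lat: float
    lon: float
    tier: int
    regulations: frozenset  # privacy regimes that apply in this jurisdiction


def distance_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points (haversine formula)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))  # 6371 km = mean Earth radius


def best_location(candidates, audience_lat, audience_lon, regulation, min_tier=3):
    """Closest candidate that meets the tier and compliance requirements."""
    eligible = [
        c for c in candidates
        if c.tier >= min_tier and regulation in c.regulations
    ]
    if not eligible:
        return None
    return min(
        eligible,
        key=lambda c: distance_km(c.lat, c.lon, audience_lat, audience_lon),
    )


# Hypothetical candidates; tier values are made up for illustration.
CANDIDATES = [
    Location("Frankfurt", 50.11, 8.68, 3, frozenset({"GDPR"})),
    Location("Amsterdam", 52.37, 4.90, 3, frozenset({"GDPR"})),
    Location("Paris", 48.86, 2.35, 2, frozenset({"GDPR"})),
    Location("Toronto", 43.65, -79.38, 4, frozenset({"PIPEDA"})),
]
```

For an audience around Berlin (52.52, 13.40) that needs GDPR coverage, `best_location(CANDIDATES, 52.52, 13.40, "GDPR")` returns the Frankfurt entry in this made-up list. A site with different priorities could reorder the rule, for example by ranking on compliance first and treating distance as a tie-breaker.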